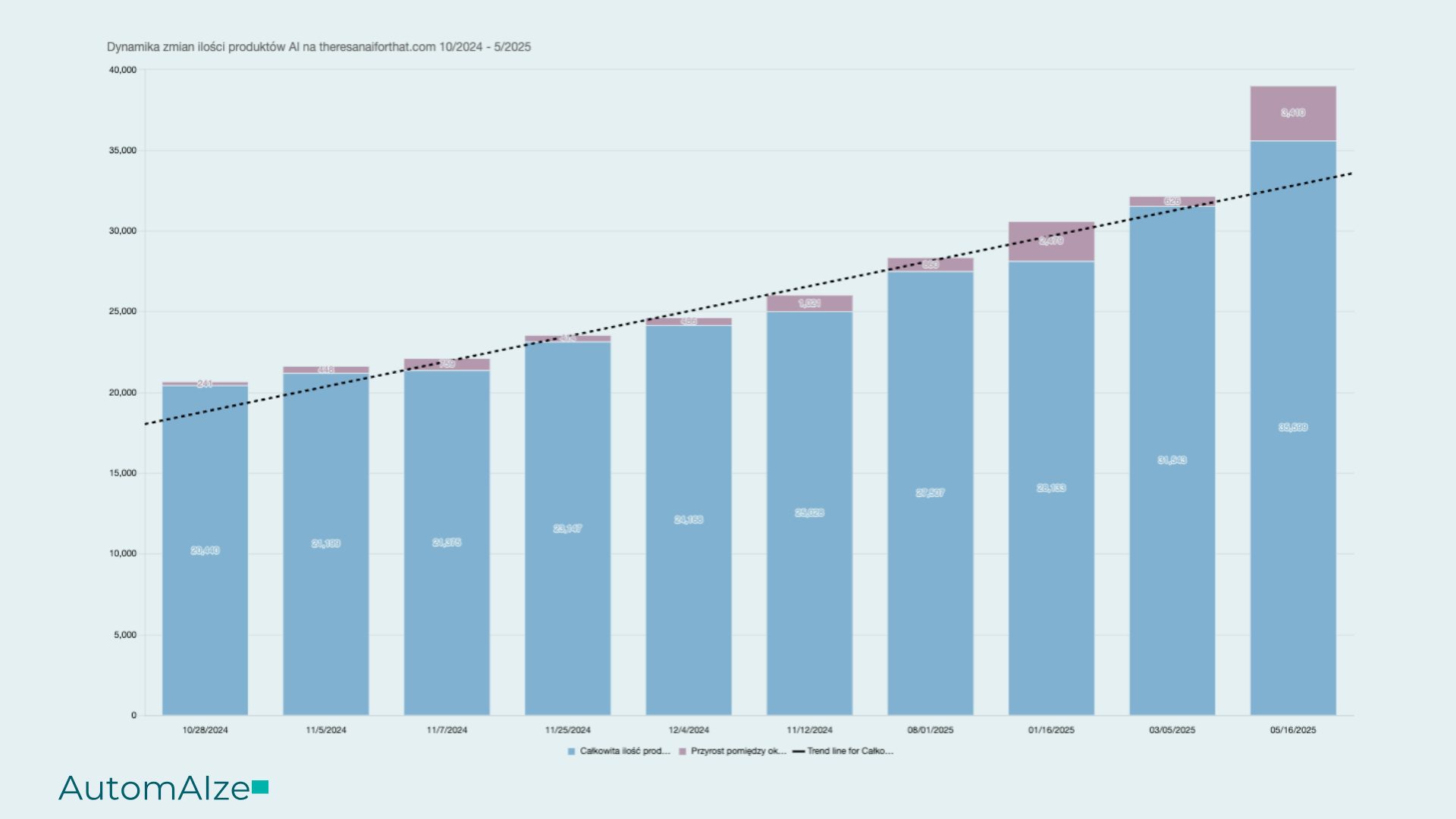

Most leading AI products are developed in the USA, around 100 new products hit the market every day, and companies have very limited ability to control them. The result is a wide range of risks: data, financial, and reputational.

The answer to these challenges is the AI Policy. In this article, you will learn:

- What an AI Policy is and why it matters

- The key focus areas to address

- How to start implementing the policy step by step

- Which tools and standards to use

What is an AI Policy?

An AI Policy is a formal company document that defines:

- how AI can be used,

- which tools are allowed,

- and what procedures apply for responding to incidents and risks.

It serves as a guide for responsible, compliant, and secure use of AI. The main objectives of an AI Policy include:

- Data protection and privacy,

- Transparency in operations (e.g. labeling AI-generated content),

- Risk and incident management,

- Building trust with clients, partners, employees, and society.

An AI Policy is essential for organizations adopting AI, as it helps foster trust and establish a solid foundation for responsible AI use. Companies also implement AI policies to comply with regulations such as the EU AI Act and to align with standards and frameworks such as the NIST AI Risk Management Framework, ISO/IEC 42001, ISO/IEC 23894, ISO/IEC 5338, or the guidance of the Responsible AI Institute.

It is especially important when it comes to the use of third-party AI products and setting clear boundaries around their availability and application.

Let’s now explore the critical areas of an AI Policy and how they are addressed in the standards mentioned above. We’ll focus primarily on the perspective of an AI consumer — an organization that uses off-the-shelf AI products available on the market.

Key Areas of an AI Policy Document

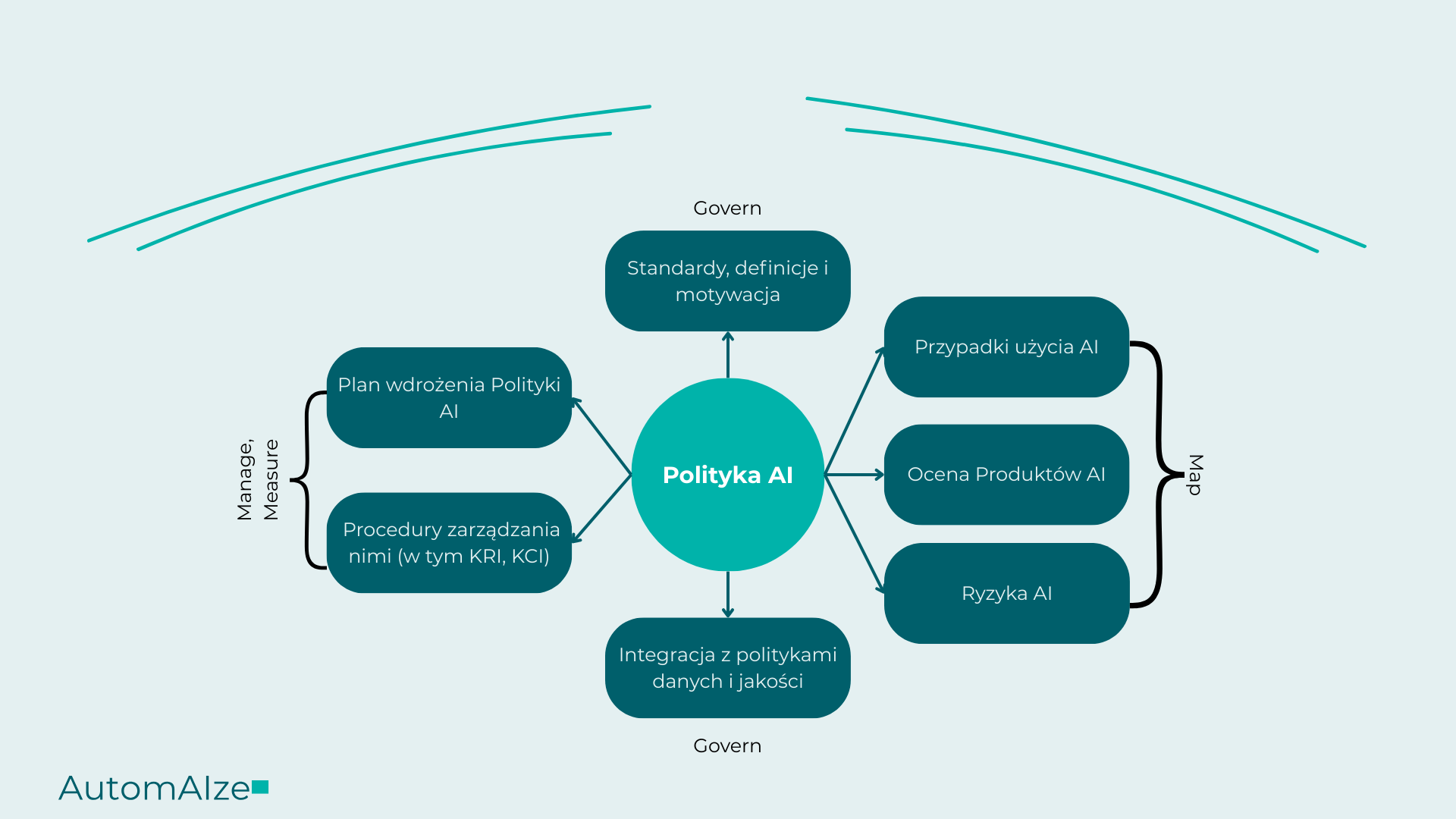

Govern - Map - Measure - Manage

The Responsible AI Institute outlines 14 essential components of an AI policy, such as: justification, scope, principles, goals and strategy, corporate governance, data and risk management, project and stakeholder management, regulatory compliance, procurement processes, documentation, and policy enforcement.

To simplify implementation, we recommend using the NIST AI Risk Management Framework (AI RMF) – Govern → Map → Measure → Manage – which we also apply in our own projects:

Govern – Oversight and Accountability

- Establish an AI Committee

- Appoint a policy owner (e.g. CTO, Quality Leader)

- Define roles and responsibilities

Map – Identifying AI Usage

- Create a list of AI tools used within the organization

- Assess AI use cases

- Classify them according to the EU AI Act (high-risk, limited-risk, prohibited)

Measure – Monitoring and Evaluation

- Define KRI (Key Risk Indicators) and KCI (Key Control Indicators)

- Establish incident response procedures

- Conduct compliance audits and reporting

Manage – Execution and Enforcement

- Define risk management procedures

- Integrate AI policy with privacy and data governance policies

- Train employees (e.g. via eLearning)

This type of policy can be extended to include both regulatory requirements and the specific needs of your organization — always with a focus on practical implementation.

AI policy: approach

Minimal control, maximum value

The availability of AI products is practically limitless. As we emphasized in the article "How to start implementing AI in a company in 2025?", approximately 100 products are created daily, available for free or by subscription. This exposes the organization to a significant risk that information ends up in the wrong system, for example personal data entered into an unsecured AI product, or sensitive source code sent to a tool where it may become accessible to other users. We discuss this in more detail in the next section, but from the perspective of employees, the most important thing is to know:

- Which AI tools are approved for use in the company

- Which tools are prohibited

- That each employee is responsible for the predictions / results / content generated by AI

- Whether any automated decisions are made within the company

- Where to report if policy rules are violated

- Who is responsible for the AI Policy

- What control and monitoring mechanisms are in place

- Whether and how AI use is subject to regulatory compliance

- What the incident response procedure looks like

Such basic guidelines and principles help everyone involved decide how to act. It is also worth referring to the publicly available Responsible AI principles from organizations such as Microsoft, AWS, Google, or the OECD.

AI Policy: Govern

Effective AI management starts with assigning responsibility. It is advisable for a company to establish an AI Committee that evaluates products, approves use cases, and responds to incidents. A specific owner of the AI policy should be responsible for the overall management – for example, the CTO, the director of quality, or the compliance officer.

The AI policy should clearly indicate who can use AI and under what conditions. Employees must understand that every AI prediction requires human verification, and that fully automated decisions are not permitted without exceptional safeguards.

Data is a critical resource. The policy should specify which data sources can be used with AI (e.g., public data, customer data, data from ERP systems) and what restrictions apply (e.g., no processing of PII in cloud tools). Good governance starts with clear rules for access and use of information.

AI Policy: Map

The second pillar is mapping the use of AI in the company. Without this, it's hard to talk about management – you don't know where the risks are, who is using AI, and whether they are doing so in accordance with the policy.

AI use cases

Start by creating a list of AI applications in the company: whether it's HR support in recruitment, automation of sales offers, or code assistants in IT. For each case, specify:

- who uses AI and for what purpose,

- what data is being processed,

- whether there is a risk of automated decision-making.
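The three questions above can be captured in a simple register. The following is a minimal sketch in Python; the `AIUseCase` class, its field names, the `needs_review` rule, and the sample entries are illustrative assumptions rather than part of any standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AIUseCase:
    """One row of a hypothetical AI use case register."""
    name: str
    department: str              # who uses AI
    purpose: str                 # for what purpose
    data_categories: List[str]   # what data is being processed
    automated_decision: bool     # risk of automated decision-making?


# Illustrative entries based on the examples mentioned in the text.
register = [
    AIUseCase("CV screening", "HR", "recruitment support",
              ["personal data"], automated_decision=True),
    AIUseCase("Offer drafting", "Sales", "automation of sales offers",
              ["customer data"], automated_decision=False),
    AIUseCase("Code assistant", "IT", "code completion",
              ["source code"], automated_decision=False),
]


def needs_review(use_cases):
    """Return use cases with automated decisions or personal data (assumed rule)."""
    return [
        uc for uc in use_cases
        if uc.automated_decision or "personal data" in uc.data_categories
    ]


flagged = [uc.name for uc in needs_review(register)]
print(flagged)
```

Keeping the register as structured data rather than free text makes it easier to filter the cases that the AI Committee should look at first.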

AI product assessment

Not all tools are safe. Map the products used (e.g., Microsoft Copilot, Perplexity, or ChatGPT) and check:

- what control you have over data in the product,

- whether a data processing agreement (DPA) can be signed,

- whether the product stores data in the EU,

- what security certifications the AI product has.
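The four checks above can be recorded as a small checklist per product. This is a sketch under stated assumptions: the `ProductAssessment` class, its field names, and the issue labels are hypothetical, and "Example Tool A" is a placeholder, not a statement about any real product.

```python
from dataclasses import dataclass, field


@dataclass
class ProductAssessment:
    """Checklist answers for one AI product (all field names are illustrative)."""
    product: str
    data_control: bool        # can the company control how its data is used?
    dpa_available: bool       # can a data processing agreement be signed?
    eu_data_residency: bool   # is data stored in the EU?
    certifications: list = field(default_factory=list)

    def open_issues(self):
        """List the checklist items that the product does not satisfy."""
        issues = []
        if not self.data_control:
            issues.append("no control over data")
        if not self.dpa_available:
            issues.append("no DPA available")
        if not self.eu_data_residency:
            issues.append("data stored outside the EU")
        if not self.certifications:
            issues.append("no security certifications")
        return issues


# Placeholder product with made-up answers.
assessment = ProductAssessment(
    product="Example Tool A",
    data_control=True,
    dpa_available=False,
    eu_data_residency=True,
    certifications=["ISO/IEC 27001"],
)
print(assessment.open_issues())
```

An empty list of open issues does not by itself make a tool safe; it only shows that the basic checks from this section have been answered positively.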

AI Risks

For each AI use case, define the risks. Also classify these cases according to the EU AI Act:

- prohibited (e.g. social scoring, untargeted scraping of facial images to build facial recognition databases),

- high (e.g. AI in recruitment, employee evaluation),

- limited (e.g. AI in marketing),

- minimal or low (e.g. AI for internal meeting summaries).

This is the foundation for further actions in the field of management and control.
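One way to make the classification usable in practice is a lookup table that the AI Committee maintains. The sketch below reuses the examples from this article; the `RiskTier` enum, the `EXAMPLE_TIERS` mapping, and the function names are assumptions for illustration, and a real classification requires legal review of each use case.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    LOW = "low"


# Example mapping taken from the article's own examples, not legal advice.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.PROHIBITED,
    "recruitment": RiskTier.HIGH,
    "employee evaluation": RiskTier.HIGH,
    "marketing": RiskTier.LIMITED,
    "internal meeting summaries": RiskTier.LOW,
}


def classify(use_case_category):
    """Look up a tier; return None when the category needs manual review."""
    return EXAMPLE_TIERS.get(use_case_category.strip().lower())


def is_allowed(use_case_category):
    """Only classified, non-prohibited use cases may proceed to approval."""
    tier = classify(use_case_category)
    return tier is not None and tier is not RiskTier.PROHIBITED


print(classify("Recruitment"))
print(is_allowed("social scoring"))
```

Returning `None` for unknown categories is a deliberate choice in this sketch: anything not yet classified goes to manual review instead of being silently treated as low risk.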

AI Policy: Measure

The third pillar is monitoring – because even the best rules mean nothing if you don't know whether they are being followed. For this purpose, it is worth implementing specific indicators and a response system.

KRI – Key Risk Indicators

Risk indicators allow for the prediction of threats. Examples:

- % of employees using unapproved AI tools,

- the number of incidents involving data in AI products,

- the number of AI use cases without human verification.

KCI – Key Control Indicators

Control indicators show whether the organization operates in accordance with the policy. Examples:

- % of AI cases marked "generated by AI",

- % of employees trained on the AI Policy,

- % of approved AI tools with a signed DPA.
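Most of the indicators above are simple ratios. As a rough sketch, they could be computed as follows; all figures and the 5% threshold are made-up values for illustration, and the variable names are assumptions.

```python
def percentage(part, total):
    """Return part/total as a percentage rounded to one decimal (0.0 if total is 0)."""
    if total == 0:
        return 0.0
    return round(100 * part / total, 1)


# Hypothetical figures for one reporting period.
employees_total = 200
employees_using_unapproved_tools = 14
employees_trained = 170
approved_tools_total = 8
approved_tools_with_dpa = 6

# KRI: share of employees using unapproved AI tools.
kri_unapproved_usage = percentage(employees_using_unapproved_tools, employees_total)

# KCI: training coverage and DPA coverage of approved tools.
kci_trained = percentage(employees_trained, employees_total)
kci_dpa_coverage = percentage(approved_tools_with_dpa, approved_tools_total)

# Assumed escalation threshold; each organization sets its own.
KRI_THRESHOLD = 5.0
kri_breached = kri_unapproved_usage > KRI_THRESHOLD

print(kri_unapproved_usage, kci_trained, kci_dpa_coverage, kri_breached)
```

Agreeing on thresholds in advance turns the indicators from passive statistics into triggers for action by the AI Committee.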

Incident Monitoring

Every AI incident (e.g., sending data to ChatGPT, hallucinations in documentation, incorrect predictions) must be:

- reported (e.g., by email or through a form),

- registered in the incident system,

- analyzed and categorized (e.g., reputational, financial, or compliance-related, such as ISO 9001 / ISO 13485).

It is also worth preparing a simple response plan – who does what and in what time frame, e.g., 48 hours to contact the client, 24 hours to report to the AI team.
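The time frames from the example above can be turned into concrete deadlines for each incident. This is a minimal sketch: the 24-hour and 48-hour limits come from the example in the text, while the function names and action labels are hypothetical.

```python
from datetime import datetime, timedelta

# Time limits taken from the example in the text; adjust to your own plan.
REPORT_TO_AI_TEAM = timedelta(hours=24)
CONTACT_CLIENT = timedelta(hours=48)


def response_deadlines(detected_at):
    """Compute the deadline of each response action for one incident."""
    return {
        "report_to_ai_team": detected_at + REPORT_TO_AI_TEAM,
        "contact_client": detected_at + CONTACT_CLIENT,
    }


def overdue_actions(detected_at, completed, now):
    """Return actions that are past their deadline and not yet completed."""
    return sorted(
        action
        for action, deadline in response_deadlines(detected_at).items()
        if action not in completed and now > deadline
    )


detected = datetime(2025, 3, 3, 9, 0)
deadlines = response_deadlines(detected)
late = overdue_actions(detected, completed=set(), now=datetime(2025, 3, 4, 10, 0))
print(deadlines)
print(late)
```

Tracking overdue actions per incident also feeds directly into the indicators described earlier in this section.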

AI Policy: Manage

The last pillar is action – that is, how to implement the AI Policy in practice and ensure that it does not remain just "on paper."

Risk management procedures

The organization should implement a process for evaluating and approving new AI use cases, preferably through regular meetings of the AI Committee.

For each new tool or case, it is necessary to:

- assess the risk (in accordance with the EU AI Act),

- approve or reject the implementation,

- determine the method of monitoring,

- apply preventive measures to mitigate the identified risks.

At the same time, the company should maintain a list of "permitted" and "prohibited" AI tools, in accordance with privacy policy and internal rules.
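A list of permitted and prohibited tools is easiest to enforce when it is machine-readable. The sketch below checks a URL against two sets of hostnames; the domains are placeholders (not real products), and the `tool_status` function and its three status labels are assumptions for illustration.

```python
from urllib.parse import urlparse

# Hypothetical lists; the domains are placeholders, not real products.
PERMITTED = {"approved-assistant.example"}
PROHIBITED = {"blocked-chatbot.example"}


def tool_status(url):
    """Classify a tool URL as permitted, prohibited, or needing approval."""
    # urlparse lowercases the hostname; it is None when the URL has no scheme.
    host = urlparse(url).hostname or ""
    if host in PROHIBITED:
        return "prohibited"
    if host in PERMITTED:
        return "permitted"
    # Anything not explicitly listed goes through the approval process.
    return "needs approval"


print(tool_status("https://approved-assistant.example/chat"))
print(tool_status("https://new-tool.example"))
```

Treating unlisted tools as "needs approval" rather than "prohibited" keeps the approach close to the minimal-control principle: employees can propose new tools, and the AI Committee decides.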

AI Policy Execution Plan

An AI policy needs an implementation plan. In practice, this means:

- Training for all employees (e.g., in the form of e-learning)

- Implementation of technical mechanisms: restricting access to unapproved tools, logging AI usage, tagging AI-generated content

- Regular review and update of the policy (e.g., once every 6 months)

A well-functioning AI policy is one that every employee knows and applies – from the sales department to R&D.

What does an AI Policy give a company?

Implementing an AI Policy delivers real value. Beyond formal compliance with regulations, it brings specific organizational improvements:

✅ Clear roles and responsibilities – everyone in the company knows who is responsible for the assessment, implementation, and control of AI (AI Committee, policy owner)

✅ Awareness of risks – the organization is aware of the threats associated with AI, can monitor them, and minimize them

✅ Safe implementations – products such as code assistants, content generation tools, or chatbots are used in accordance with policies and regulations (e.g., EU AI Act)

✅ Incident readiness – the team knows how to respond to breaches, hallucinations, incorrect predictions, or data processing issues

✅ Increase in trust – among customers, partners, and employees, that the company uses AI responsibly and transparently

Who is the AI Policy for?

Any company can start, but we particularly recommend it for organizations that:

- use more than one AI tool in their daily work

- operate in a regulated sector (e.g., medtech, fintech, HR)

- plan to develop their own AI models or decision-making systems

- process personal data, sensitive information, or intellectual property of clients

- want to control productivity and safety in working with AI

Key questions about the AI Policy in a company

🔹 ISO/IEC 42001 (AI Management System)

🔹 NIST AI Risk Management Framework

🔹 EU AI Act + Compliance Checker

🔹 Responsible AI Institute (policy and rules template)

Contact us here, and we will send you the template.

Privacy policies, Terms of use, "Trust centers," or product documentation.

Summary: Is it worth implementing an AI Policy in the company?

In a world where dozens of new AI tools emerge every day, and regulations are growing faster than technological capabilities – the lack of clear rules is a real risk.

An AI policy is a way to bring order to this chaos. It helps define which tools your employees can use, who is responsible for their actions, and how to respond when something goes wrong.

With a policy in place, your company protects data, operates in compliance with regulations, and can safely scale AI implementations. Equally important, you build trust with customers, partners, and your team. In 2025, this is not a luxury. It is a necessity.

If you want to implement AI safely – start with an AI policy.

This is the first step towards the responsible, effective, and sustainable use of artificial intelligence in your organization.